Best Practices for Embedded Security Testing

In embedded software, we need to make sure that growing complexity and software dependencies come at no cost to security.

Table of Contents

- Why Is It So Hard to Secure Embedded Applications?

- Challenges of Embedded Software Testing

- Embedded Software Testing Tools and Technologies

- Automating Test Case Creation With Fuzz Data

- Mocking on Steroids: Testing Positive and Negative Criteria With Fuzz Data

- The Secret Sauce to Fuzzing Embedded Software: Instrumentation

- Enterprise Best Practice to Deal With Complexity: CI/CD-Integrated Fuzz Testing

Why Is It So Hard to Secure Embedded Applications?

Due to increasing connectivity and dependencies, modern embedded applications are constantly growing more complex. This complexity has implications for software security testing and, depending on the toolchain, requires plenty of manual effort. From an operational perspective, many embedded industries (automotive, aviation, healthcare, etc.) are tightly staffed and work in long cycles with strict deadlines. When things get tight, other time-critical tasks can end up being prioritized over software testing.

From a technical perspective, embedded software security testing also differs from testing in other ecosystems. In the early stages, you usually test the hardware independently of the software for compliance with physical requirements and standards (e.g., electromagnetic compatibility). Later in the process, the software has to be tested against the final hardware, which is often not yet available while the software is being developed. For this purpose, most teams I've worked with either rely on developer boards or simulate the hardware in software. After completion, you integrate the software into the target platform and perform integration tests.

Hardware Dependencies and Requirements

Additionally, embedded software tests examine whether the final product, including hardware and software, fulfills all functional and non-functional requirements. So-called robustness tests are usually also performed manually by the tester. Depending on the domain of use, you’ll have to provide special evidence, such as high code coverage or modified condition/decision coverage (MC/DC).

Much embedded code, typically written in C/C++, depends on the target hardware and operating system and can’t simply be compiled and run on a developer's host machine. This leads to two significant problems:

1. Even when Public API documentation is available, you need to write plenty of test harnesses, which is incredibly time-consuming.

2. The connection between the hardware and the hardware-dependent API has to be tested to ensure that it processes unexpected inputs from the Public API securely under all circumstances.

Moreover, security tests have to verify that the application does not crash under any circumstances. They should cover all relevant states and behaviors, including edge cases caused by unexpected or erroneous inputs.

Challenges of Embedded Software Testing

Due to the interdependence of hardware and software in embedded systems, it is particularly important to test them for security and functional issues. However, it is precisely this interdependence that can also pose a few challenges. Here are seven common challenges in embedded software security testing:

1. Remote Hardware Access

One of the main challenges in embedded software testing is the reliance on hardware, which can be difficult to access due to limited physical availability, especially in the early stages of testing. Testing often has to be conducted on remote servers, which prevents the testing team from having direct access to the hardware. Emulators and simulators are commonly used to mock embedded systems. However, there is always the risk that they mimic the actual device inaccurately.

2. The Variety of Potential Attack Vectors

There is a wide variety of potential attack vectors that can be exploited in an embedded system. These include:

- Buffer Overflows: A buffer overflow occurs when more data is written to a buffer than it is designed to hold. This can overwrite other data in memory, leading to a crash or allowing an attacker to take control of the system.

- Memory Corruption: Memory corruption occurs when data is written to memory in an unintended way. This can corrupt other data in memory, leading to a crash or allowing an attacker to take control of the system.

- Code Injection: Code injection is a type of attack in which malicious code is injected into a program, allowing an attacker to take control of the system or cause it to crash.

It can be difficult to identify all the possible ways an attacker could exploit a system because of the sheer number of potential vulnerabilities.
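To make the first attack vector concrete, here is a minimal C sketch contrasting an unchecked copy with a bounds-checked one. The function name `copy_message` and the 16-byte buffer are hypothetical, chosen only for illustration; the unsafe pattern is kept as a comment, and the live function rejects inputs that would not fit instead of writing past the buffer.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define MSG_BUF_SIZE 16 /* hypothetical buffer size */

/* Unsafe pattern, shown for contrast only: strcpy() keeps writing past
 * the end of dst whenever src holds MSG_BUF_SIZE or more characters,
 * overwriting whatever lies next to the buffer in memory.
 *
 *   void copy_message_unsafe(char dst[MSG_BUF_SIZE], const char *src) {
 *       strcpy(dst, src);
 *   }
 */

/* Bounds-checked variant: copies len bytes from src only if they fit
 * together with the terminating NUL; otherwise it rejects the input. */
bool copy_message(char dst[MSG_BUF_SIZE], const char *src, size_t len) {
    if (src == NULL || len >= MSG_BUF_SIZE) {
        return false; /* refuse instead of overflowing */
    }
    memcpy(dst, src, len);
    dst[len] = '\0';
    return true;
}
```

The caller still has to pass a `len` that matches `src`; the check only guarantees that `dst` is never overrun.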

3. Time and Resource Constraints

There are established and proven test approaches for the different stages of embedded software development (unit tests, integration tests, regression tests, hardware/software integration tests, etc.). Due to time and resource constraints, teams are often forced to leave out some of these testing efforts or to run them on a reduced scale. Nonetheless, accurately simulating real-world usage requires testing a system under various conditions and with different input types. Automated testing tools for embedded software can help ensure that testing gets the attention it needs in time-constrained projects.

4. Lack of Standardization in Embedded Software Systems

The uniqueness of embedded software systems makes it hard to standardize testing methods across use cases. Each system and each domain (e.g., automotive, avionics, medical) has its own set of requirements and constraints. As such, it can be challenging to identify which testing approach is necessary. Norms such as ISO/SAE 21434 in automotive or DO-178C in avionics are enforcing more standardization throughout their respective industries. In many instances, these norms require dev teams to adjust their development processes.

5. The Need for Real-Time Testing

Many embedded systems need to react in real time. In many cases, a delayed response to an external event is considered a failure. This requires the system to be tested under conditions that are representative of its actual usage, and simulating these conditions is a huge challenge of embedded software testing. Advanced mocking is one approach that has proven effective here.
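A precondition for this kind of mocking is that timing can be controlled from a test. One common pattern, sketched below under stated assumptions, is to inject the clock as a function pointer so that a host-side test can script how much time "passes" while an event is handled. The hook type `clock_us_fn`, the mock, and the unit `handle_event` are hypothetical names, and this is an illustration rather than a complete real-time test setup.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical clock hook: on the target this would read a hardware timer
 * in microseconds; in host tests it points at a scripted mock. */
typedef uint32_t (*clock_us_fn)(void);

/* Mock clock: hands out a scripted sequence of timestamps. */
static const uint32_t *mock_ticks;
static unsigned mock_index;

static uint32_t mock_clock_us(void) {
    return mock_ticks[mock_index++];
}

/* Hypothetical unit under test: handles an event and reports whether the
 * response was finished within deadline_us microseconds. */
bool handle_event(clock_us_fn now, uint32_t deadline_us) {
    uint32_t start = now();
    /* ... the actual event handling would happen here ... */
    uint32_t end = now();
    /* Unsigned subtraction stays correct if the 32-bit timer wraps around. */
    return (uint32_t)(end - start) <= deadline_us;
}
```

A test then points `mock_ticks` at, say, `{1000, 2000}` to simulate a 1000 µs response and checks that a 500 µs deadline is reported as missed.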

6. Lack of Automated Testing Tools

Testing embedded systems manually can be time-consuming. Automated testing can help to speed up the testing process and improve accuracy. However, it can be difficult to automate tests for embedded systems due to the unique nature of each system. For this reason, many embedded development teams, especially in automotive, opt for CI/CD-integrated fuzz testing to automatically test positive and negative criteria at each pull request. Automated testing can also help explore the application and find security vulnerabilities that developers may not have considered.

7. The "Security vs Safety" Tradeoff

Many embedded systems need to be designed with both security and safety in mind. However, these two goals can often be at odds with each other. Safety mainly refers to fail-safe behavior and reliability, while security mainly refers to preventing unauthorized access to sensitive data. For example, adding security features to a system can make it more complex and difficult to test, impacting its safety. As such, embedded software teams require adequate tooling to uncover security and safety issues alike.

Embedded Software Testing Tools and Technologies

Broadly speaking, there are two kinds of embedded testing tools: static analysis and dynamic analysis. Which combination of tools and technologies fits your development process best will be largely specific to the projects you deal with. Finding the right setup will help you alleviate a large chunk of the challenges listed above. Here are some options:

Static Analysis Technologies and Tools

Static analysis is a method of evaluating source code without executing it. This is particularly useful for embedded C/C++ code, as the code does not need to be built, deployed, and run on the target hardware, which often has limited resources and is less convenient for developers to work with. Advantages include:

- Being fast, as no compilation is required

- Compliance with code styles and guidelines

- Finding code smells and issues by parsing the code

- Providing code coverage data

- Testing and analyzing code in the development environment

Two popular methods for performing static code analysis in C/C++ are linting and using compiler tools.

Linting

Linting is the process of checking source code (in C/C++ or other languages) for bugs, defects, and style issues, such as unused includes or variables. It's useful for finding errors when updating compilers and for ensuring that style guidelines are followed, which prevents miscommunication on software projects. There are specialized linters for C++, such as clang-tidy, which finds issues and can automatically fix many of them, and the IntelliSense code linter developed by Microsoft for Visual C++.

Using Compiler Tools and Flags

Compilers play a crucial role during development. You can use them not only to produce executable programs but also for static analysis of the code base. Compilers flag lines of code with warnings (potential issues) and errors (issues that prevent compilation). For testing, you can configure your compiler to treat all warnings, or only specific ones, as errors (in GCC and Clang, for example, with -Werror or -Werror=<warning>). However, the available options can be confusing and differ between compilers such as GCC (gcc/g++), Clang, and Visual C++. Using compiler tools is effective but requires some expertise, and it is best done alongside other testing methods.
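As a small illustration of treating warnings as errors, the sketch below shows a signed/unsigned comparison that GCC reports under `-Wextra` (via `-Wsign-compare`) and that `-Werror` turns into a build failure. The function `is_below` and the file name `range.c` are hypothetical; the point is that the compiler flags a comparison whose result differs from what the code seems to say.

```c
/* Build with warnings promoted to errors, for example:
 *   gcc -Wall -Wextra -Werror -c range.c
 * For C, GCC enables -Wsign-compare through -Wextra, so this naive version
 * would be rejected:
 *
 *   bool is_below(int value, unsigned limit) { return value < limit; }
 *
 * In that comparison, value is converted to unsigned, so is_below(-1, 10)
 * would return false even though -1 < 10.
 */
#include <stdbool.h>

/* Corrected version: handle negative values before comparing as unsigned. */
bool is_below(int value, unsigned limit) {
    return value < 0 || (unsigned)value < limit;
}
```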

Dynamic Analysis Technologies and Tools

Dynamic analysis tools test software for vulnerabilities by running it with randomized or predefined inputs, using tools such as libFuzzer. The goal is to trigger unexpected or unusual behavior. Advantages include:

- Black-box approaches for dynamic analysis do not require the source code of an application to test it

- Code can be executed on both production and development environments for comparison

- Detecting runtime issues

- Testing with real integrations and dependencies

Two popular methods for dynamic code analysis are unit testing and fuzz testing.

Unit Testing

Unit testing is a technique in which automated tests are written by developers to evaluate the functionality of a specific unit of code (e.g., a function or class). There are various approaches to unit testing, such as test-driven development, during which tests are written first to guide the development process. Another approach is using unit tests as regression tests to identify bugs introduced into previously working code. Unit testing is particularly useful for C/C++ developers working on large projects, as it allows you to test specific portions of the codebase without having to compile the entire project. In C++, for example, CppUnit, Catch2, and Boost.Test are popular unit testing frameworks. Visual Studio also provides a built-in C++ testing solution.
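For comparison with fuzz testing, here is what a deterministic unit test can look like in plain C, using only `assert` rather than one of the frameworks above. The unit `sat_add_u8` is a hypothetical example; what matters is that every test input has a known expected result.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical unit under test: saturating 8-bit addition, e.g. for a
 * counter that must stick at its maximum instead of wrapping around. */
uint8_t sat_add_u8(uint8_t a, uint8_t b) {
    unsigned sum = (unsigned)a + b;
    return sum > UINT8_MAX ? UINT8_MAX : (uint8_t)sum;
}

/* Deterministic unit test: each input has a known expected output. */
void test_sat_add_u8(void) {
    assert(sat_add_u8(1, 2) == 3);
    assert(sat_add_u8(0, 0) == 0);
    assert(sat_add_u8(200, 55) == 255);   /* exactly at the upper bound */
    assert(sat_add_u8(200, 100) == 255);  /* saturates instead of wrapping to 44 */
}
```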

Fuzz Testing

Fuzz testing is a dynamic analysis method that involves feeding invalid, unexpected, or random data, known as fuzz data, into the software under test and observing how it behaves. Similar to unit testing, the software is tested under various scenarios. The key difference is that unit testing is deterministic, meaning that the expected outcome is known in advance, while fuzz testing is non-deterministic or exploratory, meaning that the expected outcome is not necessarily known ahead of time. This makes fuzz testing useful for uncovering bugs and security vulnerabilities that may not have been identified through other testing methods.
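A minimal sketch of a fuzz test using libFuzzer's entry point `LLVMFuzzerTestOneInput` is shown below; the parser `parse_temperature` and its accepted range are hypothetical. Unlike a unit test, the harness has no expected output per input: it only checks an invariant and relies on crashes (or sanitizer reports) to flag bugs. It could be built with something like `clang -g -fsanitize=fuzzer,address temp_fuzz.c`.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical unit under test: parses an ASCII temperature such as "-12"
 * and accepts only values in the sensor range [-40, 125] degrees Celsius. */
bool parse_temperature(const uint8_t *buf, size_t len, int *out) {
    size_t i = 0;
    bool negative = false;
    int value = 0;
    if (len == 0 || len > 4) return false;   /* short inputs only; also rules out int overflow */
    if (buf[0] == '-') { negative = true; i = 1; }
    if (i == len) return false;              /* a lone '-' is not a number */
    for (; i < len; i++) {
        if (buf[i] < '0' || buf[i] > '9') return false;
        value = value * 10 + (buf[i] - '0');
    }
    if (negative) value = -value;
    if (value < -40 || value > 125) return false;
    *out = value;
    return true;
}

/* libFuzzer entry point, called repeatedly with generated inputs. */
int LLVMFuzzerTestOneInput(const uint8_t *data, size_t size) {
    int t;
    if (parse_temperature(data, size, &t)) {
        assert(t >= -40 && t <= 125);        /* must hold for every accepted input */
    }
    return 0;
}
```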

There are different techniques to simulate embedded dependencies for fuzz testing, such as mocking hardware-dependent functions to return static values or using feedback-based fuzz data to mock the behavior of external sources under realistic conditions. You can then combine these techniques with other dynamic and/or static test methods to achieve even more comprehensive results.

Automating Test Case Creation With Fuzz Data

In my experience, writing test cases that adequately cover relevant program states and behaviors manually takes time and bears the risk of missing things. A mocking approach based on fuzz data has proven to be an effective way to speed things up and pinpoint issues more accurately. Instead of manually trying to come up with test cases, this approach uses fuzz data to automatically generate invalid, unexpected, or random inputs for your embedded application. This way, you can simulate the behavior of your embedded software units under realistic circumstances.

To make sure that your fuzzer is not just throwing random test inputs at your application, you should opt for tools that are capable of leveraging information about the software under test to refine fuzz data. Such a so-called “white-box” (or grey-box) fuzzing approach enables your fuzzer to maximize test coverage based on runtime information from previous inputs and thereby trigger (almost) all relevant program states.

This approach to embedded software security testing is much faster and more accurate than manual testing or "dumb" fuzzing without feedback. I explain this in more detail below.
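To illustrate the feedback idea, here is a deliberately tiny, self-contained grey-box loop in C. It is a toy model, not how libFuzzer or any other production fuzzer is implemented: the target `run_target` reports "coverage" bits by hand (real fuzzers get this from compiler instrumentation), and `greybox_fuzz` keeps a mutated input only if it reaches coverage not seen before. All names, the 4-byte input, and the xorshift PRNG are assumptions for illustration.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define INPUT_LEN 4
#define MAX_CORPUS 8
#define ALL_COVERED 31u /* coverage bits 0..4 */

/* Toy target: each nested branch sets a "coverage" bit by hand, standing in
 * for what compiler instrumentation would report to a real fuzzer. */
static uint32_t run_target(const uint8_t in[INPUT_LEN]) {
    uint32_t cov = 1u;                               /* function entry */
    if (in[0] == 'B') {
        cov |= 2u;
        if (in[1] == 'U') {
            cov |= 4u;
            if (in[2] == 'G') {
                cov |= 8u;
                if (in[3] == '!') cov |= 16u;        /* the hard-to-reach "bug" */
            }
        }
    }
    return cov;
}

/* xorshift32 PRNG with a fixed seed, so runs are reproducible. */
static uint32_t rng_state = 2463534242u;
static uint32_t next_rand(void) {
    rng_state ^= rng_state << 13;
    rng_state ^= rng_state >> 17;
    rng_state ^= rng_state << 5;
    return rng_state;
}

/* Grey-box loop: mutate one byte of a corpus entry and keep the mutant only
 * if it reaches coverage not seen before. Returns the accumulated coverage. */
uint32_t greybox_fuzz(unsigned iterations) {
    uint8_t corpus[MAX_CORPUS][INPUT_LEN] = {{0}};   /* seed: all-zero input */
    size_t corpus_size = 1;
    uint32_t seen = run_target(corpus[0]);

    for (unsigned i = 0; i < iterations && seen != ALL_COVERED; i++) {
        uint8_t mutant[INPUT_LEN];
        size_t pick = next_rand() % corpus_size;
        size_t pos = next_rand() % INPUT_LEN;
        uint8_t val = (uint8_t)next_rand();

        memcpy(mutant, corpus[pick], INPUT_LEN);
        mutant[pos] = val;                           /* single-byte mutation */

        uint32_t cov = run_target(mutant);
        if ((cov & ~seen) != 0) {                    /* feedback: new coverage? */
            seen |= cov;
            if (corpus_size < MAX_CORPUS) {
                memcpy(corpus[corpus_size++], mutant, INPUT_LEN);
            }
        }
    }
    return seen;
}
```

Because every kept input becomes a base for further mutations, the loop reaches the nested `'!'` branch one byte at a time; a purely random 4-byte input would hit it only with probability 1 in 2^32 per attempt.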

Start Fuzzing Your Own Code

CI Fuzz is a fuzzing tool that allows you to run feedback-based fuzz tests using a few simple commands. Try it out and fuzz your C/C++ code within a few minutes.

Mocking on Steroids: Testing Positive and Negative Criteria With Fuzz Data

To test an application with dynamic inputs, you would usually have to compile and run it first. But with embedded software, you often have the problem that it only runs on specific hardware or that the application requires input from external sources. For this reason, mocking/simulating these dependencies is needed for DAST, IAST, or fuzzing.

A bare-bones approach to testing embedded systems is to mock the hardware-dependent functions so that they return static values. However, this setup is basically blind: it lacks runtime context, so it misses many possible behaviors and results in comparatively low code coverage.
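A minimal sketch of such a static mock, assuming a hypothetical hardware read `read_battery_mv()` and a unit `classify_battery()` that depends on it: because the mock always returns the same value, only one of the three branches is ever exercised.

```c
#include <stdint.h>

enum battery_state { BATT_OK, BATT_LOW, BATT_CRITICAL };

/* Static mock of a hypothetical hardware-dependent function. On the target,
 * read_battery_mv() would sample an ADC; on the host it always reports 3700 mV. */
uint16_t read_battery_mv(void) {
    return 3700;
}

/* Hypothetical unit under test: classifies the battery voltage. */
enum battery_state classify_battery(void) {
    uint16_t mv = read_battery_mv();
    if (mv < 3300) return BATT_CRITICAL;  /* never reached with the static mock */
    if (mv < 3500) return BATT_LOW;       /* never reached with the static mock */
    return BATT_OK;
}
```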

As described above, a more accurate method for embedded security testing is to mock the dependencies of your embedded system with fuzz data. This allows you to dynamically generate return values for your mocked functions, so you can make use of the magic of feedback-based fuzzing to simulate the behavior of external sources under realistic conditions. This approach also lets you cover both positive and negative test cases. If you have the resources, I would always recommend combining different DAST, IAST, and fuzzing approaches.
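Here is a sketch of the fuzz-data variant, assuming a hypothetical hardware read `read_battery_mv()` and a unit `classify_battery()` that depends on it. The mocked read consumes two bytes from the current libFuzzer input instead of returning a constant, so the fuzzer can drive the unit into its normal, low, and critical branches. The helper `consume_u16` and the little-endian byte layout are assumptions for illustration.

```c
#include <stddef.h>
#include <stdint.h>

enum battery_state { BATT_OK, BATT_LOW, BATT_CRITICAL };

/* The current fuzz input; the mock below reads its return values from it. */
static const uint8_t *fuzz_data;
static size_t fuzz_size;

/* Hypothetical helper: takes the next two bytes (little-endian) from the input. */
static uint16_t consume_u16(void) {
    if (fuzz_size < 2) return 0;                 /* input exhausted: fall back to 0 */
    uint16_t v = (uint16_t)(fuzz_data[0] | (fuzz_data[1] << 8));
    fuzz_data += 2;
    fuzz_size -= 2;
    return v;
}

/* Fuzz-data mock of a hypothetical hardware read (an ADC on the target). */
uint16_t read_battery_mv(void) {
    return consume_u16();
}

/* Hypothetical unit under test: classifies the battery voltage. */
enum battery_state classify_battery(void) {
    uint16_t mv = read_battery_mv();
    if (mv < 3300) return BATT_CRITICAL;
    if (mv < 3500) return BATT_LOW;
    return BATT_OK;
}

/* libFuzzer entry point: each input becomes the sequence of values the mocked
 * hardware returns, so the fuzzer can steer the unit into every branch. */
int LLVMFuzzerTestOneInput(const uint8_t *data, size_t size) {
    fuzz_data = data;
    fuzz_size = size;
    (void)classify_battery();
    return 0;
}
```

Under libFuzzer, coverage feedback quickly pushes the first two bytes into the ranges that reach the low and critical branches, which a constant mock never touches.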

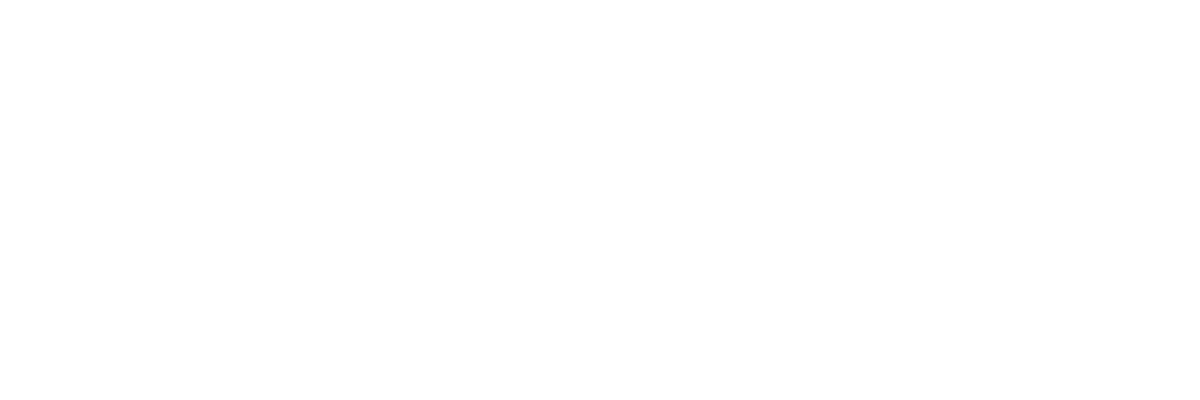

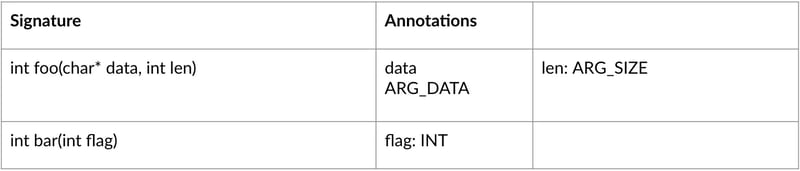

Two Excel sheets can be enough to generate a fuzz test that achieves high code coverage while using fuzz data to simulate the input from external sources.

The Secret Sauce to Fuzzing Embedded Software: Instrumentation

One of the reasons why modern fuzzing technology is so effective is that it can leverage feedback from previous test inputs to increase code coverage. To achieve this, the source code has to be instrumented at compile time. Coverage instrumentation tells the fuzzing engine which parts of the code each input reached, while sanitizers (such as AddressSanitizer) add runtime checks that make bugs like out-of-bounds memory accesses crash immediately instead of going unnoticed. With this information about the program under test, the fuzzing engine can constantly craft more effective test inputs.
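A minimal sketch of what instrumentation buys you, assuming a hypothetical length-prefixed frame format and the hypothetical function `frame_payload_sum`: built with `-fsanitize=fuzzer,address`, libFuzzer gets coverage feedback, and AddressSanitizer turns an out-of-bounds read into an immediate, reported crash. The bounds check shown is exactly the kind of check whose absence the sanitizer would expose.

```c
/* Build for fuzzing with coverage feedback plus AddressSanitizer, e.g.:
 *   clang -g -O1 -fsanitize=fuzzer,address frame_fuzz.c -o frame_fuzz
 */
#include <stddef.h>
#include <stdint.h>

/* Hypothetical frame format: [length byte][payload ...]. Returns the sum of
 * the payload bytes, or -1 for a malformed frame. */
int frame_payload_sum(const uint8_t *buf, size_t size) {
    if (size < 1) return -1;
    size_t declared = buf[0];
    /* Without this check, a frame that claims more bytes than it carries
     * would read past the end of buf; under ASan that out-of-bounds read
     * aborts with a report instead of silently returning garbage. */
    if (declared > size - 1) return -1;
    int sum = 0;
    for (size_t i = 0; i < declared; i++) sum += buf[1 + i];
    return sum;
}

/* libFuzzer entry point. */
int LLVMFuzzerTestOneInput(const uint8_t *data, size_t size) {
    (void)frame_payload_sum(data, size);
    return 0;
}
```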

Leveraging the API Documentation to Generate Fuzz Tests

You will most likely need some form of structured input to automatically generate your test harnesses. To determine what kinds of input may be suitable for testing, use the documentation of the Public API. In my experience, most software teams that work on embedded hardware have such documentation stored as a CSV or Excel table. This documentation can be used to automatically create sophisticated fuzz tests without any further adaptations.

Your fuzzer then starts by calling functions from your Public API documentation file in random order, with random parameters. Through instrumentation, the fuzzer can then gather feedback about the covered code. Based on this feedback, it can actively adapt and mutate the called functions and parameters to increase code coverage and trigger more interesting program states. This setup will allow you to generate test cases you might not have thought of that can traverse large parts of the tested code.
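The sketch below shows one way such a harness could look, assuming a hypothetical three-function API (`api_reset`, `api_set_speed`, `api_brake`) as it might be listed in an API table. It is hand-written rather than generated from a spreadsheet: the fuzz input is read as (selector, argument) byte pairs, so the fuzzer decides which function to call, in which order, and with which parameter, while an assertion encodes one expected property.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical public API, as it might appear in an API table:
 *   api_reset()                 -> stop the vehicle model
 *   api_set_speed(uint8_t kmh)  -> set the current speed
 *   api_brake(uint8_t percent)  -> reduce speed by 0..100 percent
 */
static uint8_t speed_kmh;

void api_reset(void) { speed_kmh = 0; }
void api_set_speed(uint8_t kmh) { speed_kmh = kmh; }
uint8_t api_get_speed(void) { return speed_kmh; }

void api_brake(uint8_t percent) {
    if (percent > 100) return;                       /* reject invalid parameter */
    speed_kmh = (uint8_t)(speed_kmh - (unsigned)speed_kmh * percent / 100u);
}

/* Harness: the fuzz input is read as (selector, argument) byte pairs, so the
 * fuzzer picks which API function to call, in which order, with which value. */
int LLVMFuzzerTestOneInput(const uint8_t *data, size_t size) {
    api_reset();                                     /* start each input from a known state */
    for (size_t i = 0; i + 1 < size; i += 2) {
        uint8_t arg = data[i + 1];
        switch (data[i] % 3) {
        case 0:
            api_reset();
            break;
        case 1:
            api_set_speed(arg);
            break;
        default: {
            uint8_t before = api_get_speed();
            api_brake(arg);
            assert(api_get_speed() <= before);       /* braking must never increase speed */
            break;
        }
        }
    }
    return 0;
}
```

With a table-driven generator, the `switch` would be produced from the rows of the API documentation instead of being written by hand.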

Industry Standards for Embedded Software Testing in Automotive

The automotive industry is subject to many industry norms. Many of them, such as ISO/SAE 21434 or the UNECE WP.29 regulations, include requirements for software security testing. A large number of these norms recommend integrating modern fuzz testing into the development process as a measure to comply with their requirements. ISO/SAE 21434 in particular has presented the automotive industry with many new challenges in recent years. Based on our experience with customers, my colleagues created an overview of how modern fuzzing technology can help automotive software teams become ISO/SAE 21434 compliant while building more secure software.


3 Reasons Why You Should Fuzz Embedded Systems

In my experience, fuzzing is one of the most effective ways to test embedded systems. This is especially true because the margin of error in many embedded industries is very small: software defects do not only affect the functionality of systems, they can also have a physical impact on our lives (e.g., in automotive brake systems).

1. Increased Code Coverage

By leveraging feedback about the software under test, modern fuzzers can actively craft test inputs that maximize code coverage. Apart from reaching critical parts of the tested code more reliably, this enables highly useful reports that show dev teams how much of their code was actually executed during a test. These reports can help identify which parts of the code need additional testing or closer inspection.

2. Detecting Memory Corruption Accurately

Uncovering memory corruption issues is one of the strong suits of feedback-based fuzzing, which makes it highly relevant for memory-unsafe languages such as C/C++ (nonetheless, fuzz testing is also very effective at securing memory-safe languages). By feeding embedded software with unexpected or invalid inputs, modern fuzzing tools can uncover dangerous edge cases and behaviors related to memory access.

3. Early Bug Detection

Feedback-based fuzzing tools can be integrated into the development process to test your code automatically as soon as you have an executable program. By leveraging the bug-finding capacities of fuzzing early, you can find bugs and vulnerabilities before they become a real problem.

Example: If you find a bug in a JSON parser during unit testing, you can most likely fix it in a couple of minutes. That is relatively easy compared to tracking down a crash that was possibly caused by a specific user input affecting only one particular component. So do yourself a favor and fix bugs before they pop up in production.

Enterprise Best Practice to Deal With Complexity: CI/CD-Integrated Fuzz Testing

I recommend CI/CD-integrated fuzz testing for enterprise projects, as it allows you and your team to fuzz your code automatically at each code change. Ideally, you would implement such a testing setup early in the development process to avoid late-stage fixes and to speed up the development process. I think Thomas Dohmke, CEO of GitHub, put it best when he said that modern fuzzing tools are "like having an automated security expert who is always by your side."

Anyway, if you want to find out more about how you can set up fuzz testing for large automotive projects, you can book a personal demo with one of my colleagues or check out our product page.